This material was originally circulated as an internal document on July 11, 2020, and has been reworked here as a web page.

■Contents

- Concept/Motivation

- Recent trends on XAI

- Method 1: LIME/SHAP

- Example: Classification

- Example: Regression

- Example: Image classification

- Method 2: ABN for image classification

■Concept/Motivation

Generally speaking, AI is a black box. We want AI to be explainable because…

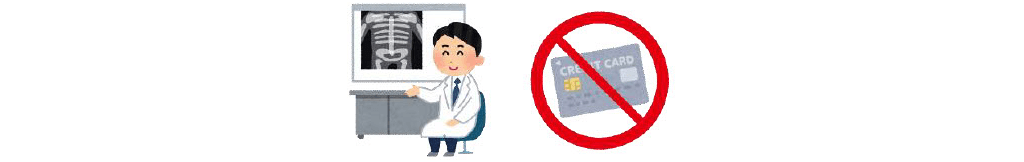

1. Users need to trust an AI (an individual prediction, or the model as a whole) before they will actually use it

Ex: diagnosis/medical check, credit screening

G. Tolomei et al., arXiv:1706.06691

People want to know why they were rejected by AI screening, and what they should do in order to pass the screening.

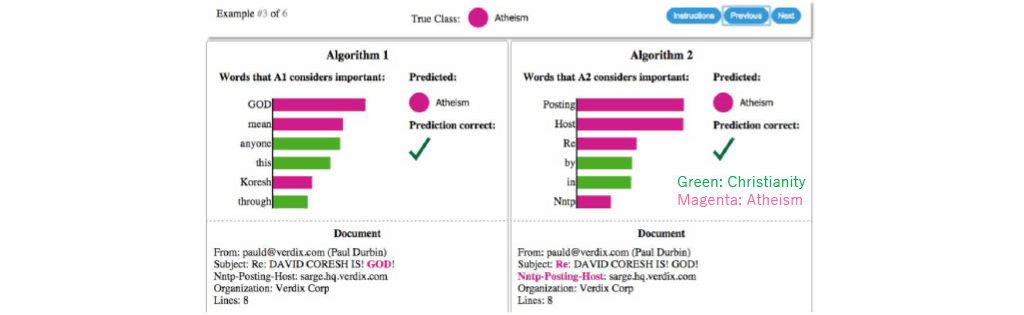

2. It helps in choosing a model among several candidates

A text classifier that labels documents as “Christianity” or “Atheism”

Both models classify the example correctly,

but judging from the words each model relies on, it is apparent that model 1 is better than model 2.

3. It helps to detect overfitting when the training data differs from the test data

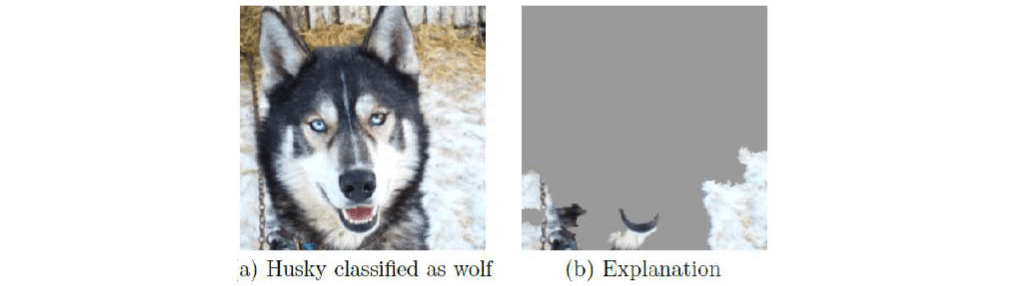

Cf: The well-known “husky or wolf” example

The training dataset contains pictures of wolves against snowy backgrounds.

As a result, a classifier trained on that dataset outputs “wolf” whenever the input image contains snow.

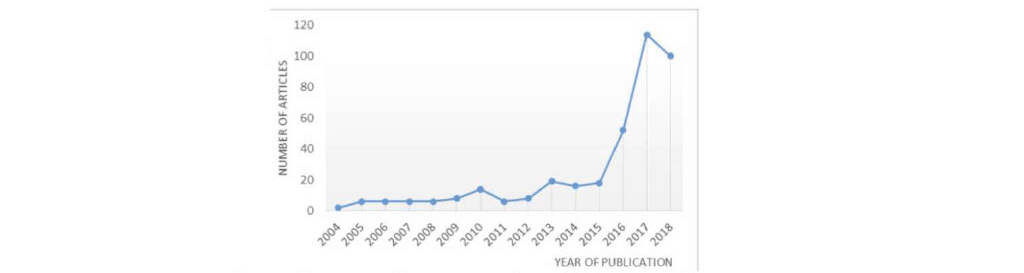

■Recent trends

Number of papers that include one of the explanation-related words (“intelligible”, “interpretable”, …)

AND

one of the AI-related words (“machine learning”, “deep learning”, …)

FROM

7 repositories (arXiv, Google Scholar, …)

The number of studies on XAI has been growing in recent years.

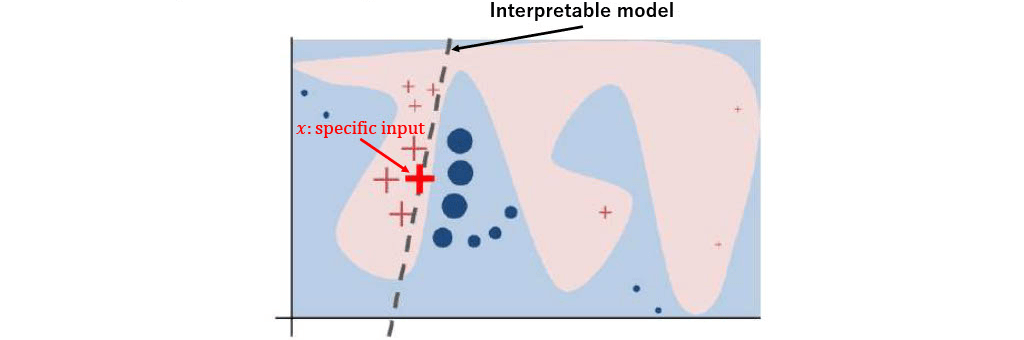

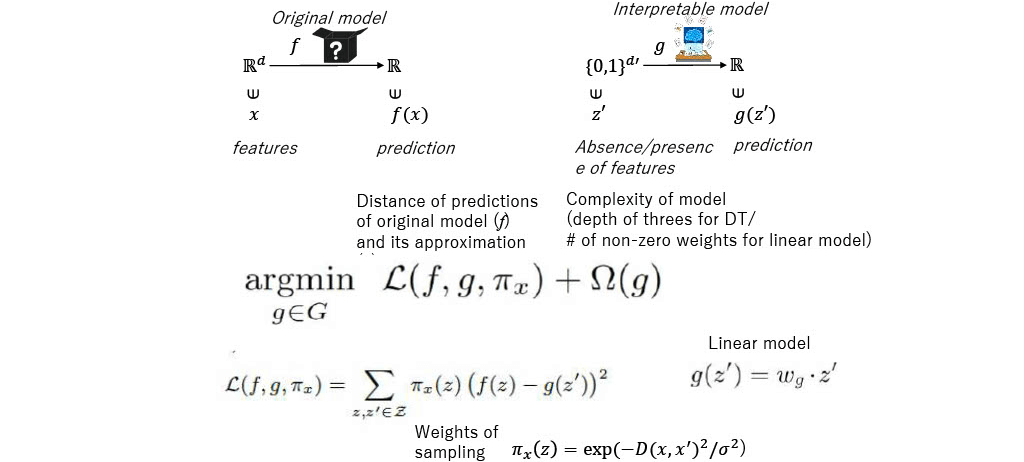

■Method 1: Local Interpretable Model-agnostic Explanations (LIME)

Objects: Classifier or Regressor

M. Ribeiro, S. Singh, C. Guestrin, arXiv:1602.04938

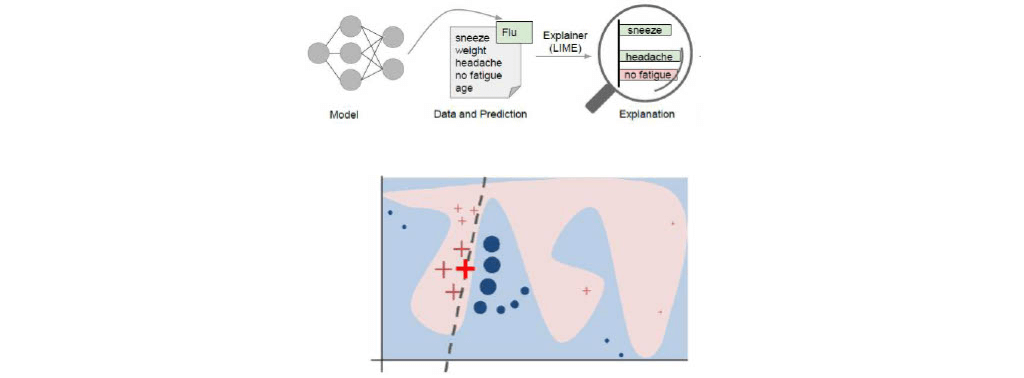

Basic idea: Approximate the original ML model with an interpretable model

(linear model or decision tree) in the vicinity of a specific input.

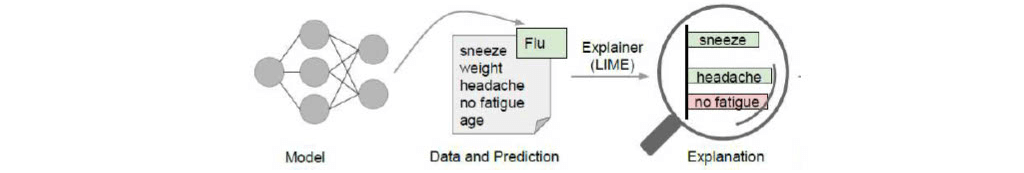

■Explaining individual predictions

- The original model predicts from features (sneeze, headache, …) whether the patient has the flu or not.

- LIME approximates the model with a linear model in the vicinity of the specific patient.

- The weights of the linear model for each feature give an “explanation” of the prediction.
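The three steps above can be sketched in a few lines. This is only a minimal illustration of the idea, not the LIME package itself: the function `black_box`, its two features (sneeze, headache), the Gaussian perturbation, and the kernel width are all assumptions made for the example.

```python
import numpy as np

def black_box(X):
    """Hypothetical nonlinear model: probability of flu from (sneeze, headache)."""
    sneeze, headache = X[:, 0], X[:, 1]
    return 1.0 / (1.0 + np.exp(-(3.0 * sneeze * headache + sneeze - 1.0)))

def lime_explain(predict, x, n_samples=5000, scale=0.3, kernel_width=0.5, seed=0):
    """Fit a proximity-weighted linear surrogate around the instance x."""
    rng = np.random.default_rng(seed)
    # 1. Perturb the instance with Gaussian noise (assumed sampling scheme).
    Z = x + rng.normal(0.0, scale, size=(n_samples, x.size))
    # 2. Query the black-box model on the perturbed samples.
    y = predict(Z)
    # 3. Weight each sample by its proximity to x (exponential kernel).
    d2 = np.sum((Z - x) ** 2, axis=1)
    w = np.exp(-d2 / kernel_width ** 2)
    # 4. Weighted least squares on centred features plus an intercept column.
    A = np.hstack([Z - x, np.ones((n_samples, 1))])
    sw = np.sqrt(w)[:, None]
    coef, *_ = np.linalg.lstsq(A * sw, y * sw[:, 0], rcond=None)
    return coef[:-1], coef[-1]  # per-feature weights, local intercept

patient = np.array([1.0, 0.0])  # hypothetical patient: sneezing, no headache
weights, intercept = lime_explain(black_box, patient)
print(dict(zip(["sneeze", "headache"], weights.round(3))))
```

Running the same function for a different patient gives different weights, because the surrogate is only valid near the point it was fitted around.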

■Desirable features of LIME

- Interpretable

- Local fidelity

- Model-agnostic

(The original model is not affected by LIME at all)

- Global perspective

(sample different inputs and their predictions)

■SHapley Additive exPlanations (SHAP)

S. Lundberg, S-I. Lee, arXiv:1705.07874

A generalization of several XAI methods:

- LIME

- DeepLIFT: A. Shrikumar et al., arXiv:1605.01713

- Layer-Wise Relevance Propagation: S. Bach et al., PLoS ONE 10(7), e0130140 (2015)

It builds on concepts from cooperative game theory:

- Shapley regression values

- Shapley sampling values

- Quantitative Input Influence
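To make the game-theoretic idea concrete, here is a minimal sketch that computes exact Shapley values by enumerating all feature subsets. It is not the shap package: the toy model `f`, the input `x`, and the choice to replace "missing" features with a fixed `baseline` are assumptions for illustration. SHAP itself works with expectations over background data and relies on approximations, since exact enumeration is exponential in the number of features.

```python
from itertools import combinations
from math import factorial

def shapley_values(value, n_features):
    """Exact Shapley values for a set function value(frozenset of feature indices)."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(n_features):
            # Weight of a coalition of this size in the Shapley average.
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

def f(x):
    """Hypothetical model with one additive term and one interaction term."""
    return 2.0 * x[0] + x[1] * x[2]

x = (1.0, 2.0, 3.0)         # instance to explain (made up)
baseline = (0.0, 0.0, 0.0)  # assumed reference values for "missing" features

def value(subset):
    mixed = [x[i] if i in subset else baseline[i] for i in range(len(x))]
    return f(mixed)

phi = shapley_values(value, len(x))
print(phi)
print(sum(phi), f(x) - f(baseline))
```

The attributions sum to f(x) minus f(baseline), which is the "additive" part of the name, and the interaction term `x[1] * x[2]` is split equally between the two features involved.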

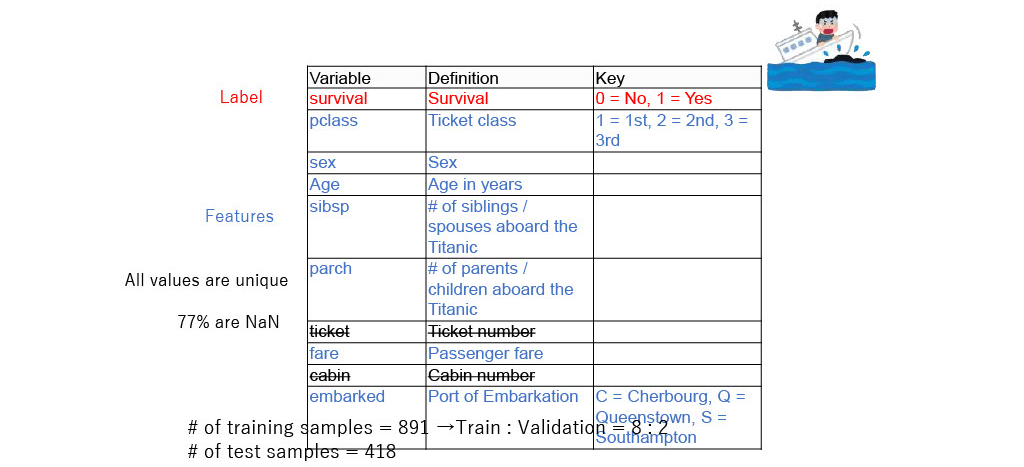

■Example: Classification (Titanic dataset)

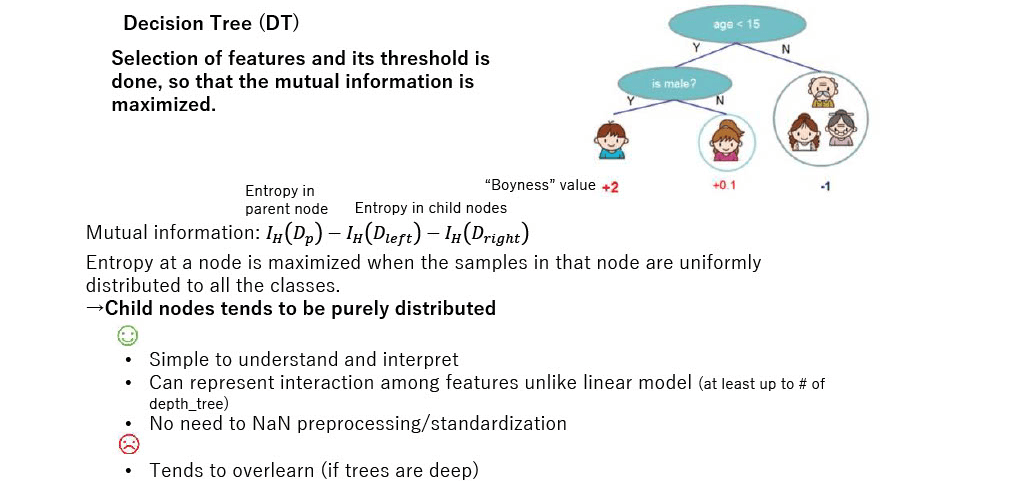

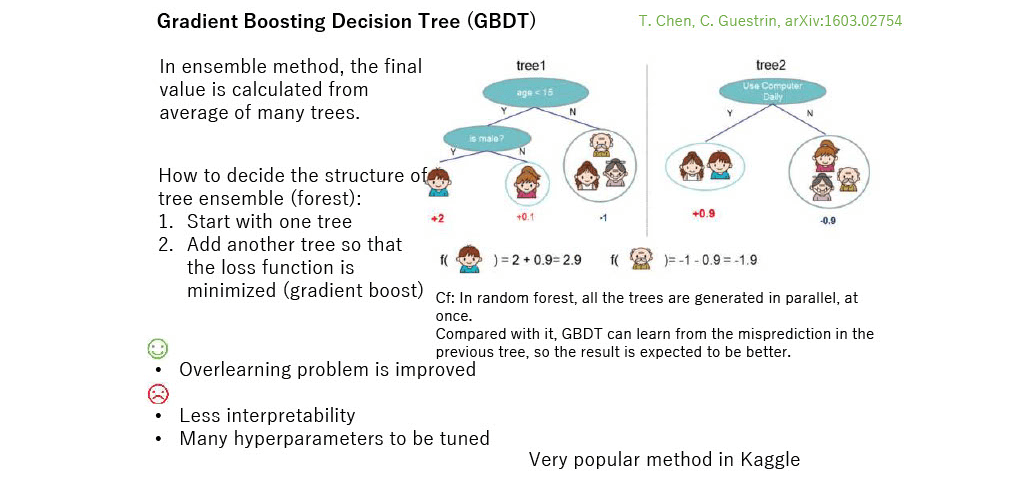

■GBDT

I used a GBDT implementation (XGBoost) as the ML model, under the following conditions:

- No standardization of numerical features (decision trees do not need it)

- No preprocessing of NaN (XGBoost handles NaN as it is)

- No feature engineering

- Hyperparameter tuning for n_estimators and max_depth (Optuna is excellent for this)

Results:

Best parameters: {'n_estimators': 20, 'max_depth': 12}

Validation score: 0.8659217877094972

Test score: 0.77033

Cf: Baseline (all women survive, all men die): 0.76555

Cf: Reported best score with ensemble method: 0.84210

Review of know-how on feature engineering by experts:

Kaggle notebook “How am I doing with my score?”

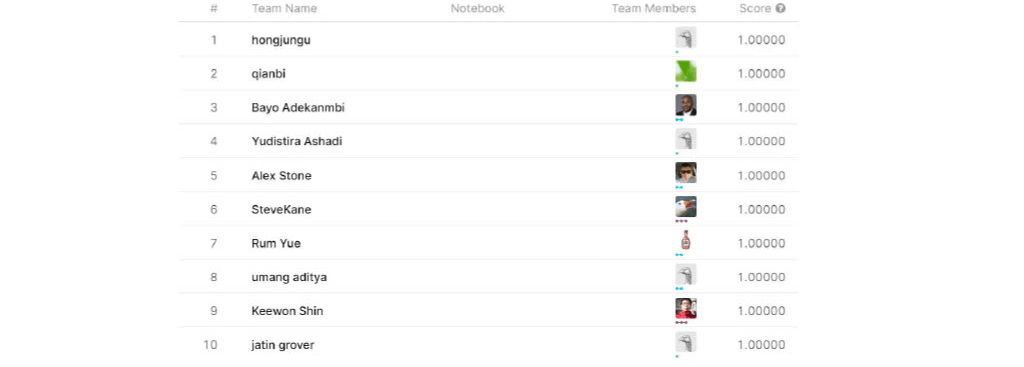

Cf: Test scores in the Kaggle competition

Cheaters… (Memorizing the names of all the survivors? Repeated submissions tuned with a GA?)

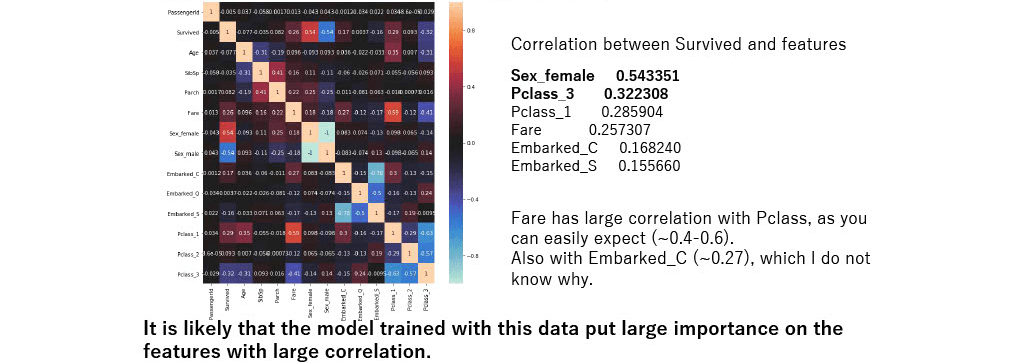

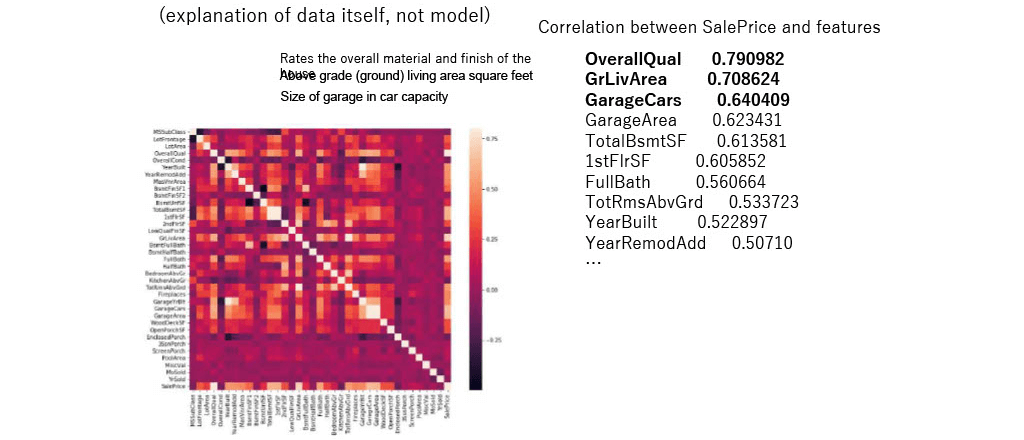

Linear correlation between features and label

(explanation of the data itself, not of the model)

This is the first method to try when selecting important features.

It is practical when the number of features is small.
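As a rough sketch of this first step, the Pearson correlation between each feature column and the label can be computed and ranked by absolute value. The column names and the six rows below are made up for illustration and are not taken from the Titanic data.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)

def rank_features(columns, label):
    """Sort features by the absolute value of their correlation with the label."""
    scores = {name: pearson(col, label) for name, col in columns.items()}
    return sorted(scores.items(), key=lambda kv: abs(kv[1]), reverse=True)

# Hypothetical toy data (not real passengers).
columns = {
    "is_female": [1, 1, 0, 0, 1, 1],
    "age": [22, 38, 26, 35, 27, 54],
}
survived = [1, 1, 0, 0, 1, 0]

ranked = rank_features(columns, survived)
for name, r in ranked:
    print(f"{name}: {r:+.3f}")
```

Note that this only captures linear, one-feature-at-a-time relationships in the data, which is why it says nothing about what a trained model actually uses.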

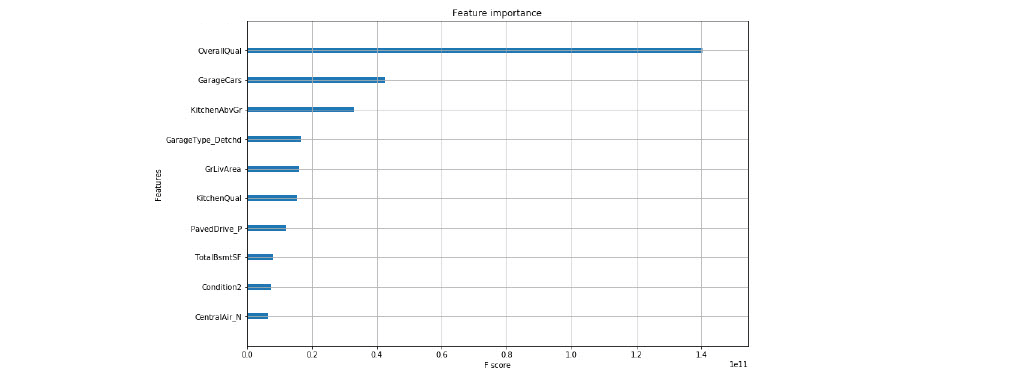

Importance of features in GBDT

(explanation of the whole model, not of a specific sample)

The average gain (in mutual information, or reduction in impurity) of the splits that use the feature

The top 3 agree with those from linear correlation.

However, this method has a problem of “inconsistency”

(when a model is changed such that a feature has a higher impact on the output, the importance of that feature can decrease)

This problem is overcome by LIME/SHAP.

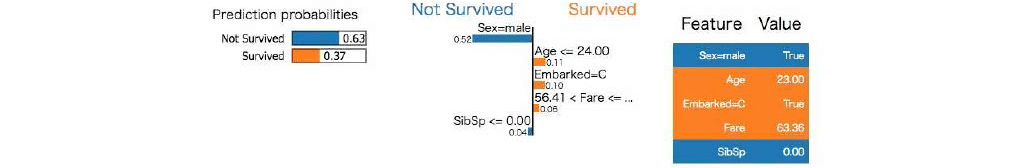

■LIME

Explanation by LIME

(explanation of the model's prediction for a specific sample)

My code did not work on the Kaggle kernel because of a bug in the LIME package…

So here I quote results from another author.

https://qiita.com/fufufukakaka/items/d0081cd38251d22ffebf

Because LIME approximates the model locally with a linear function,

the weights of the features differ from sample to sample.

In this sample, the top 3 features are Sex, Age, and Embarked.

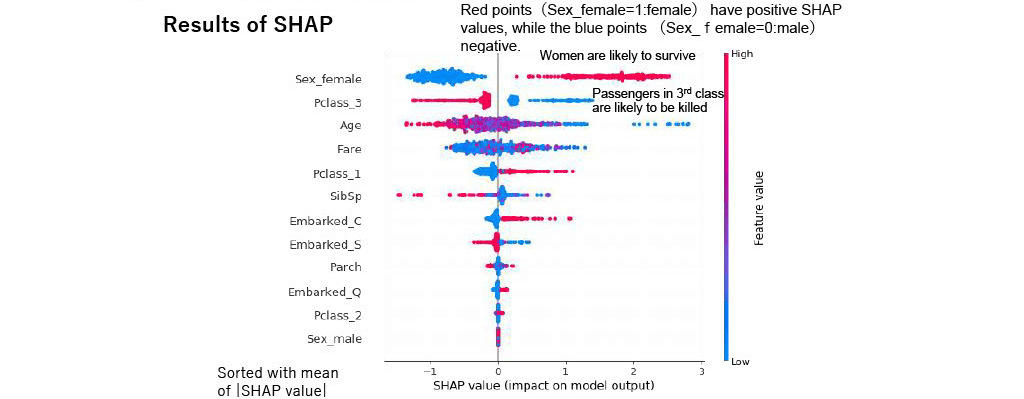

■SHAP

The top 3 do not agree with those from linear correlation or the XGBoost importance score (Age enters).

Because SHAP is consistent (unlike the feature importance of XGBoost) and has local fidelity (unlike linear correlation), I would trust the SHAP result more than the other two.

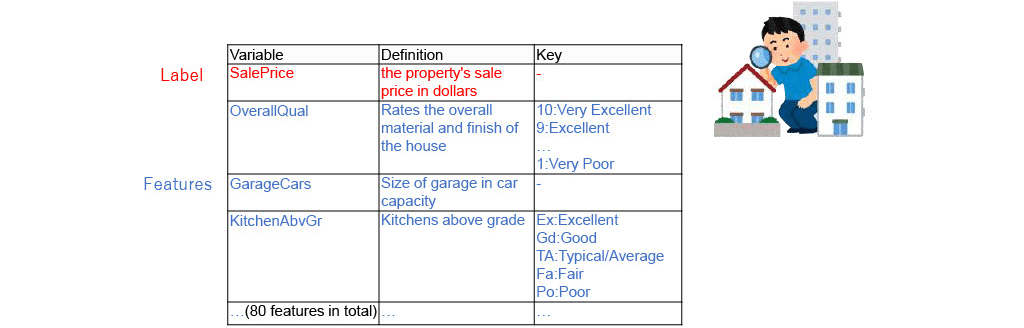

■Example: Regression (Ames Housing dataset)

A dataset describing the sale of individual residential properties in Ames, Iowa, from 2006 to 2010.

Number of training samples = 1460 → Train : Validation = 75 : 25

Number of test samples = 1459
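For reference, the 75 : 25 split of the 1460 training samples works out to 1095 training rows and 365 validation rows. A minimal sketch of such a split follows. The shuffling and the seed are assumptions, since the exact splitting procedure is not stated here.

```python
import random

def train_val_split(n_samples, val_fraction=0.25, seed=0):
    """Return shuffled (train_indices, val_indices) for n_samples rows."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # assumed: random split with a fixed seed
    n_val = round(n_samples * val_fraction)
    return indices[n_val:], indices[:n_val]

train_idx, val_idx = train_val_split(1460)
print(len(train_idx), len(val_idx))
```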

Model: XGBoost

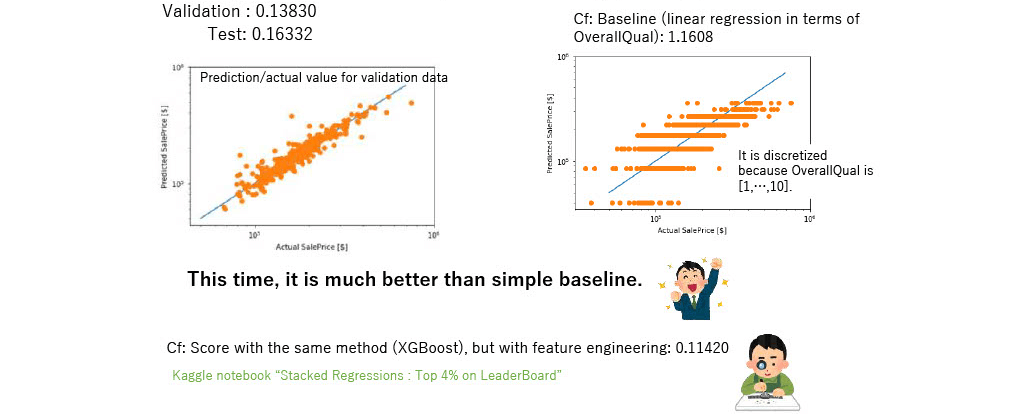

Results (the metric is the Root Mean Squared Error of the Log (RMSEL))
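The metric can be written down directly: take the logarithm of the predicted and the observed prices, and compute the RMSE between them. The function name `rmse_of_log` and the example prices are placeholders chosen for this sketch.

```python
from math import log, sqrt

def rmse_of_log(y_true, y_pred):
    """RMSE between log(predicted price) and log(observed price)."""
    squared = [(log(p) - log(t)) ** 2 for t, p in zip(y_true, y_pred)]
    return sqrt(sum(squared) / len(squared))

# A 10% overestimate costs the same for a cheap and an expensive house.
print(rmse_of_log([100_000.0], [110_000.0]))
print(rmse_of_log([500_000.0], [550_000.0]))
```

Because the error is measured on the log scale, it depends on the ratio between prediction and truth rather than on the absolute difference, so errors on expensive and cheap houses are weighted equally.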

Linear correlation between features and label

Importance of features in GBDT

(explanation of the whole model, not of a specific sample)

The top 3 differ from those of linear correlation (KitchenAbvGr enters).

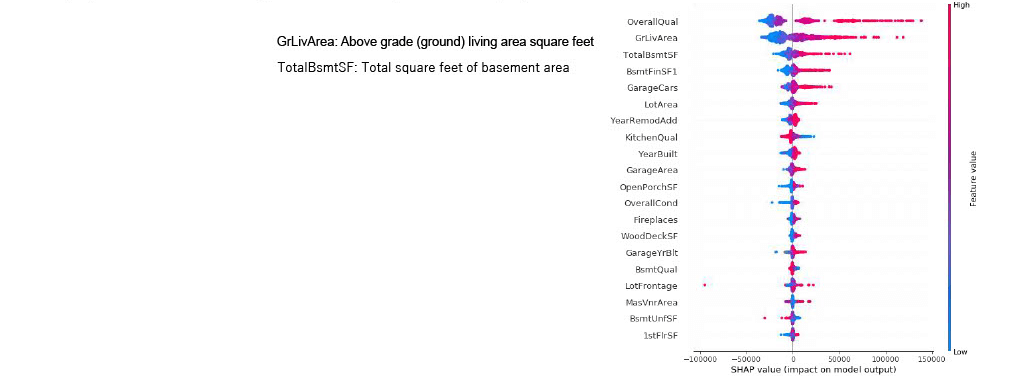

Explanation by SHAP

(explanation of the model's prediction for a specific sample)

The top 3 differ from those of linear correlation/LIME.

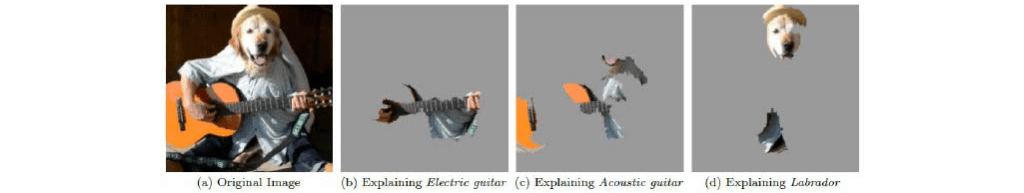

■Example: Image Classification

Results of LIME

The model seems to focus on the right places.

However, there are models that cannot be approximated well by LIME.

Ex: A classifier that decides whether an image is “retro” or not from the values of all the pixels together (sepia tone?)

Husky or wolf example

From this explanation, it is easy to see that the model is focusing on the snow.

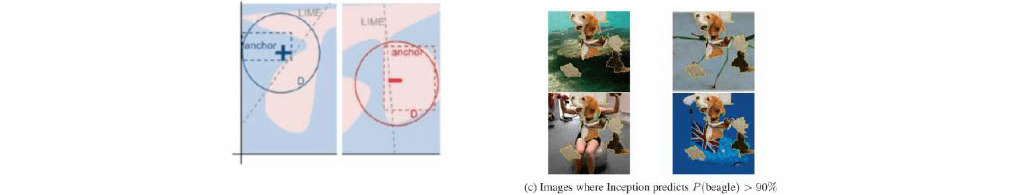

Other approaches

- Anchor: M. Ribeiro et al., Thirty-Second AAAI Conference on Artificial Intelligence, 2018.

Gives the range of feature values within which the prediction does not change

- Influence: P. Koh, P. Liang, arXiv:1703.04730

Identifies the training data on which the prediction is based

■Method 2: ABN for image classification

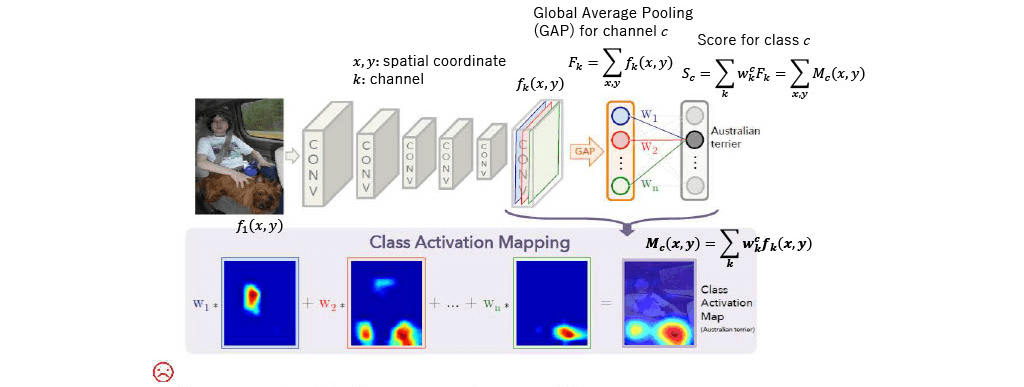

Class Activation Mapping (CAM)

B. Zhou et al., arXiv:1512.04150

- Classification accuracy decreases because the fully-connected (Fc) layers are replaced with global average pooling (GAP).
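The reason GAP is needed can be seen in a small sketch. With GAP followed by a single linear layer, the class score is a weighted sum of the pooled feature maps, so the same weights applied before pooling give a spatial map for that class. The array shapes and the random inputs below are assumptions for illustration, not values from a trained network.

```python
import numpy as np

def class_scores(feature_maps, fc_weights):
    """feature_maps: (K, H, W), fc_weights: (n_classes, K) -> scores (n_classes,)."""
    pooled = feature_maps.mean(axis=(1, 2))  # global average pooling -> (K,)
    return fc_weights @ pooled

def class_activation_map(feature_maps, fc_weights, class_idx):
    """Weighted sum of the K feature maps using the weights of one class -> (H, W)."""
    return np.tensordot(fc_weights[class_idx], feature_maps, axes=1)

rng = np.random.default_rng(0)
feature_maps = rng.random((8, 7, 7))      # synthetic last-conv-layer output
fc_weights = rng.normal(size=(3, 8))      # synthetic weights for 3 classes

scores = class_scores(feature_maps, fc_weights)
cam = class_activation_map(feature_maps, fc_weights, class_idx=int(scores.argmax()))
print(cam.shape)
print(np.isclose(cam.mean(), scores.max()))
```

The spatial average of the map equals the class score, which is what makes the map a faithful decomposition of the prediction. This only holds because no nonlinear Fc layers sit between the pooling and the output.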

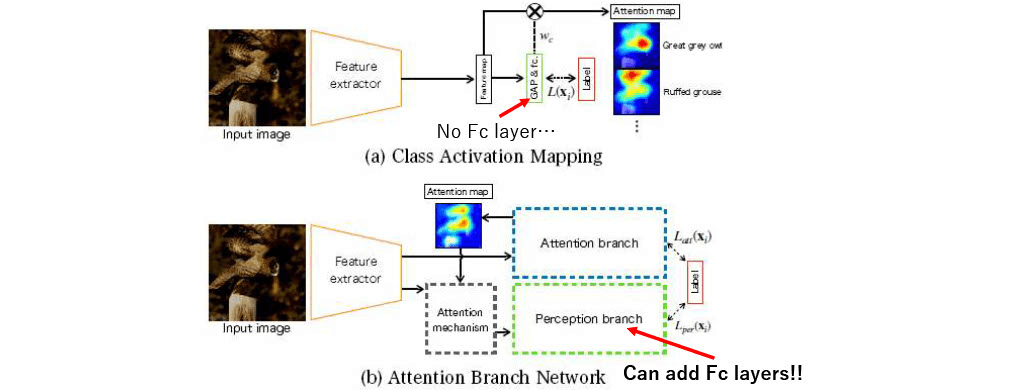

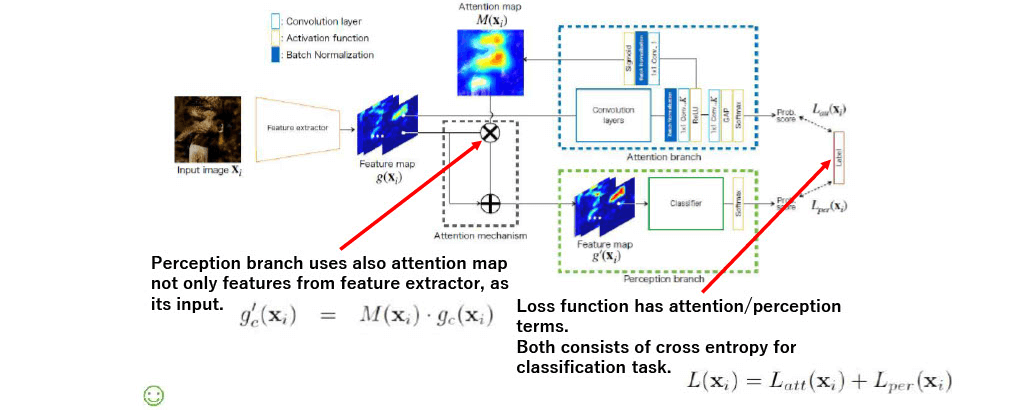

Attention Branch Network (ABN)

Basic idea: Separate the attention branch from the classification branch so that Fc layers can be used in the latter.

H. Fukui et al., arXiv:1812.10025

- Classification accuracy improves because Fc layers can be used.

- In fact, using the attention map in the input of the perception branch and in the loss function improves accuracy.
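A rough sketch of how the attention map can enter both the perception-branch input and the loss is given below. It assumes the residual form g'(x) = (1 + M(x)) · g(x) for the attention mechanism and a total loss that is the sum of the cross-entropy losses of the two branches. Treat both as assumptions, since they are not spelled out in this article, and the function names are placeholders.

```python
import numpy as np

def apply_attention(features, attention_map):
    """features: (K, H, W), attention_map: (H, W) in [0, 1].

    Residual form: highlighted regions are amplified, and regions with
    zero attention keep their original features instead of being erased.
    """
    return features * (1.0 + attention_map)[None, :, :]

def cross_entropy(logits, label):
    """Softmax cross-entropy for a single sample."""
    shifted = logits - logits.max()  # for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum())
    return -log_probs[label]

def abn_loss(att_logits, per_logits, label):
    """Assumed total loss: attention-branch loss plus perception-branch loss."""
    return cross_entropy(att_logits, label) + cross_entropy(per_logits, label)

features = np.ones((2, 3, 3))        # synthetic feature-extractor output
attention = np.zeros((3, 3))
attention[1, 1] = 1.0                # attend only to the centre pixel
print(apply_attention(features, attention)[0])
print(abn_loss(np.zeros(4), np.zeros(4), label=0))
```

Training both branches with one summed loss means the attention map is learned end to end together with the classifier, rather than being computed after the fact.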

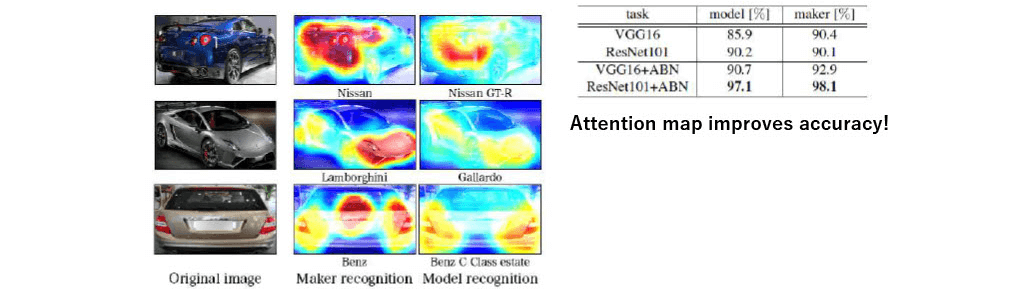

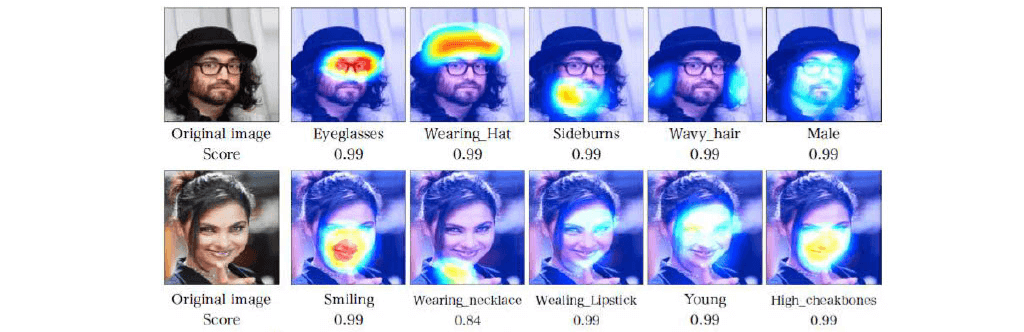

Results of ABN

As I do not have domain knowledge,

I cannot judge whether the model is focusing on the correct features…

Are the highlighted parts characteristic of each maker/model?

It is interesting that attention is paid to different parts depending on the task.

The model seems to be focusing on the correct features.

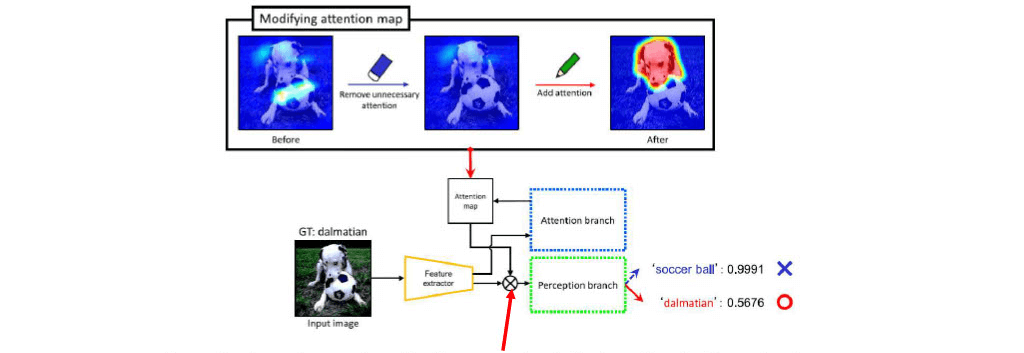

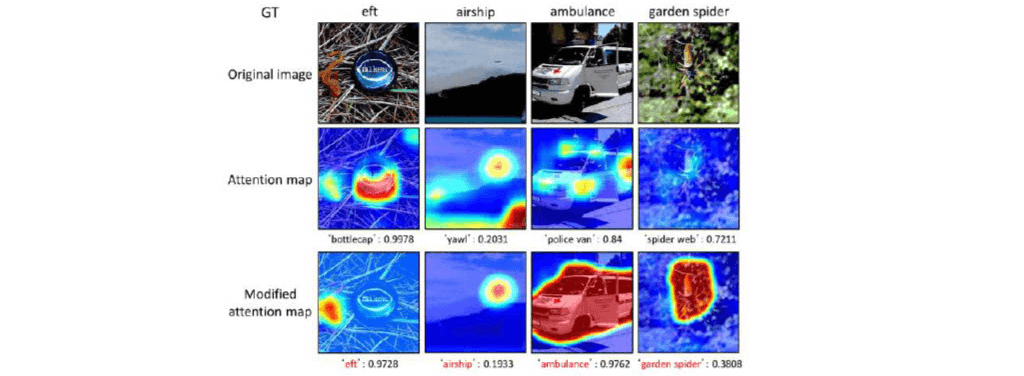

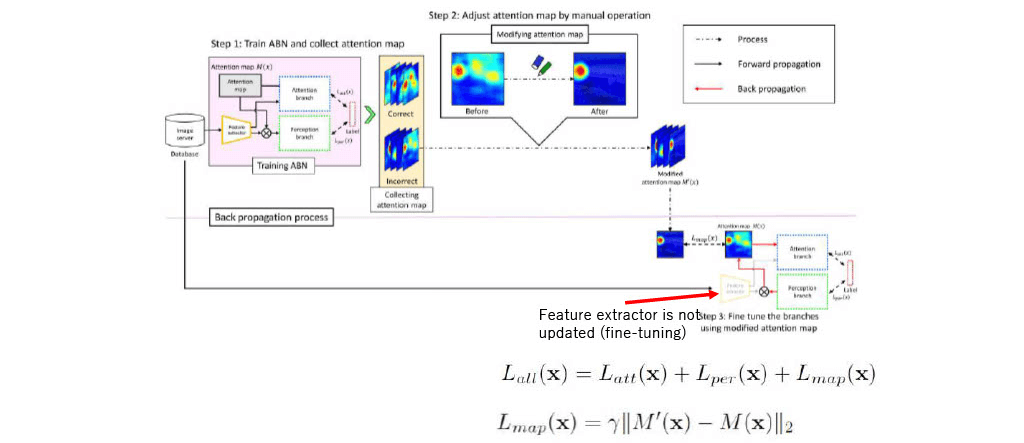

ABN and human-in-the-loop

M. Mitsuhara et al., arXiv:1905.03540

Basic idea: Improve the accuracy of an image classifier by modifying the attention map in ABN using human knowledge.

Modifying the attention map manually helps classification

The perception branch takes as input not only the features from the feature extractor but also the attention map.

Results

Modifying the attention map improved the classification accuracy

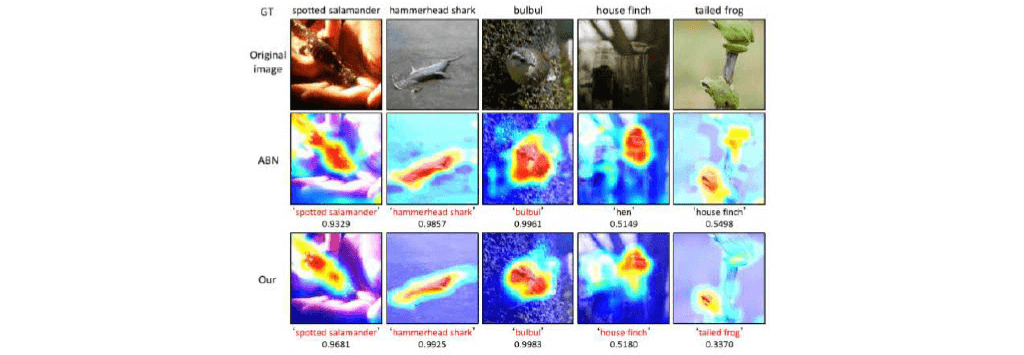

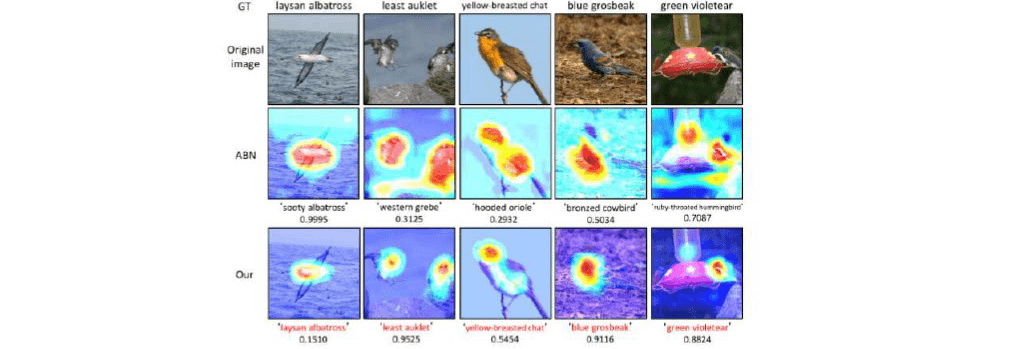

ABN with HITL

Results

HITL improves the attention map (though not very visibly), and also the classification accuracy.

HITL improves the attention map (it focuses on the relevant parts, not the whole body), and also the classification accuracy.

HITL is possible because the explanation (attention map) is produced as a part of the model in ABN, and is reused as input to the perception branch.

Unlike LIME, ABN is not model-agnostic.

So being model-agnostic is perhaps not always an advantage:

sometimes it is better to be able to touch the model itself.

■Summary

I introduced a few recent XAI methods.

1. LIME, for

- Classification of structured data

- Regression of structured data

- Image classification

2. ABN, for

- Image classification

I also introduced an application of human-in-the-loop to ABN.


■Reference

Survey on XAI

- A. Adadi, M. Berrada, https://ieeexplore.ieee.org/document/8466590/

- F. Doshi-Velez, B. Kim, arXiv:1702.08608

■Codes

Notebook in Kaggle by Daisuke Sato

- “Random forest/XGBoost, and LIME/SHAP with Titanic”

- “Predict Housing price”

ArithmerBlog
