This material was originally circulated as an internal document on October 10, 2020, and has been reworked here for publication as a web page.

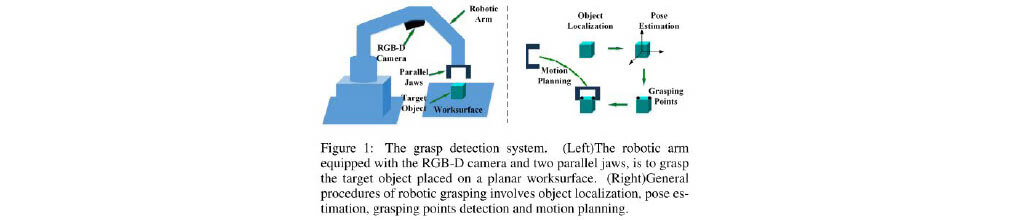

■Object Pose Estimation

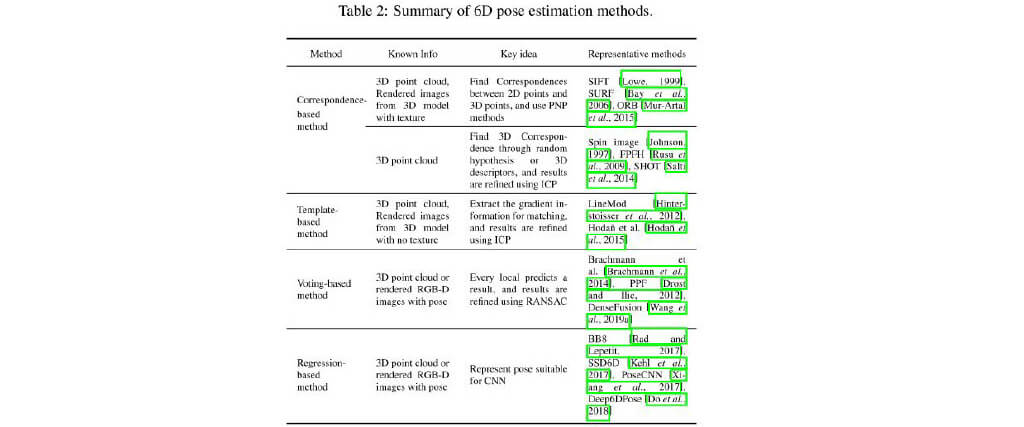

- Correspondence-based method: find correspondences between 2D points and 3D points, then solve for the pose (PnP, ICP)

- Template-based method: extract gradient information for template matching, then refine the result (ICP)

- Voting-based method: each local element (such as a pixel or patch) predicts a result, and the combined result is refined using RANSAC

- Regression-based method: represent the pose in a form suitable for a CNN to regress

■Summary of 6D pose estimation methods

Arxiv:1905.06658

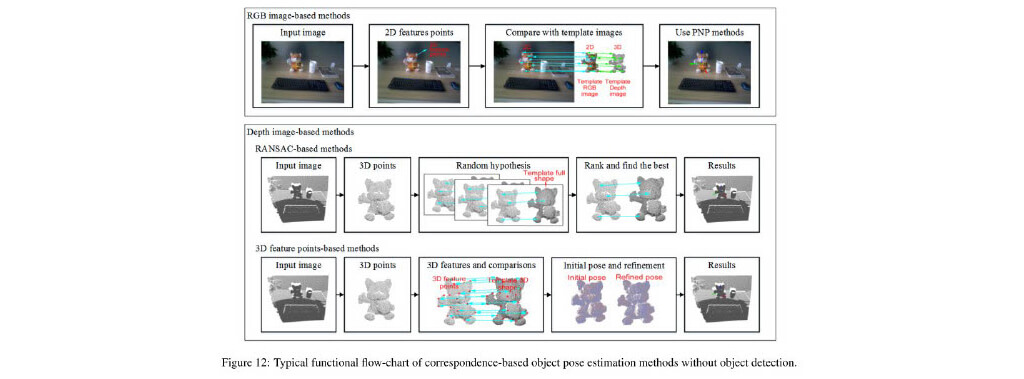

■Correspondence-based method

Suited to objects with rich textures, from which image features can be extracted

Arxiv:1905.06658

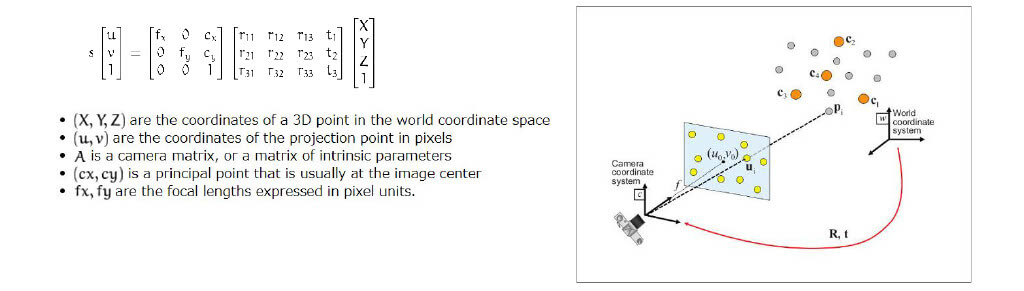

■PnP solver

Estimates the extrinsic camera parameters from known 3D points in world coordinates and their 2D projections

There are many solvers (e.g., P3P, P5P, ...), typically combined with RANSAC or eigenvalue decomposition

Arxiv:1905.06658
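
To make the PnP idea concrete, here is a minimal sketch using the Direct Linear Transform (DLT), one of the simplest linear solvers. It is not P3P or any specific solver from the survey. The function name `pnp_dlt`, the intrinsic matrix `K`, and all numeric values are assumptions chosen for illustration. It needs at least six non-coplanar points and noise-free matches; real pipelines would normally wrap a minimal solver in RANSAC.

```python
import numpy as np

def pnp_dlt(points_3d, points_2d, K):
    """Estimate R, t (world -> camera) from n >= 6 non-coplanar 3D-2D matches."""
    n = len(points_3d)
    # Remove the intrinsics: pixel coordinates -> normalized image coordinates.
    pix_h = np.hstack([points_2d, np.ones((n, 1))])
    norm = (np.linalg.inv(K) @ pix_h.T).T
    X_h = np.hstack([points_3d, np.ones((n, 1))])

    # Each match gives two linear equations in the 12 entries of P = [R | t].
    A = np.zeros((2 * n, 12))
    A[0::2, 0:4] = X_h
    A[0::2, 8:12] = -norm[:, [0]] * X_h
    A[1::2, 4:8] = X_h
    A[1::2, 8:12] = -norm[:, [1]] * X_h

    # The solution is the right singular vector of the smallest singular value.
    P = np.linalg.svd(A)[2][-1].reshape(3, 4)

    # P is known only up to scale and sign: project its left block onto a rotation.
    U, S, Vt = np.linalg.svd(P[:, :3])
    R = U @ Vt
    t = P[:, 3] / S.mean()
    if np.linalg.det(R) < 0:
        R, t = -R, -t
    return R, t

# Synthetic check: project known points with a known pose, then recover it.
rng = np.random.default_rng(0)
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
angle = 0.3
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.1, -0.2, 5.0])
points_3d = rng.uniform(-1.0, 1.0, size=(10, 3))
cam = points_3d @ R_true.T + t_true          # points in the camera frame
proj = cam @ K.T
points_2d = proj[:, :2] / proj[:, [2]]       # perspective division
R_est, t_est = pnp_dlt(points_3d, points_2d, K)
print(np.abs(R_est - R_true).max(), np.abs(t_est - t_true).max())
```

With noisy or mismatched correspondences, the linear solution degrades quickly, which is why robust estimation such as RANSAC is usually added on top.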

■ICP algorithm

Iterative matching of two point clouds

- Find nearest-neighbor correspondences between the two point clouds

- Transform (rotate and translate) one point cloud to match the other

- Repeat the steps above
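
The three steps above can be sketched as a minimal point-to-point ICP. This is an illustrative sketch, not the implementation used in any of the cited papers: the function names, the brute-force nearest-neighbor search, the fixed iteration count, and the grid test data are all assumptions. The rigid transform in each iteration is computed with the SVD-based (Kabsch) method.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares R, t with R @ src[i] + t ~= dst[i] (Kabsch / SVD method)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection (determinant -1).
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = dst_c - R @ src_c
    return R, t

def icp(source, target, iterations=30):
    """Align source to target; returns the accumulated R, t and the moved points."""
    R_total, t_total = np.eye(3), np.zeros(3)
    current = source.copy()
    for _ in range(iterations):
        # 1. Match every source point to its nearest target point (brute force).
        d2 = ((current[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
        matched = target[d2.argmin(axis=1)]
        # 2. Rigid transform that best aligns the matched pairs.
        R, t = best_rigid_transform(current, matched)
        current = current @ R.T + t
        # 3. Accumulate the transform and repeat.
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total, current

# Demo: a 4x4x4 grid with unit spacing, displaced by a small rigid motion.
axis = np.arange(4.0)
target = np.array([[x, y, z] for x in axis for y in axis for z in axis])
theta = 0.05
R0 = np.array([[np.cos(theta), -np.sin(theta), 0.0],
               [np.sin(theta),  np.cos(theta), 0.0],
               [0.0, 0.0, 1.0]])
source = target @ R0.T + np.array([0.05, -0.03, 0.02])
R_icp, t_icp, aligned = icp(source, target)
print(np.abs(aligned - target).max())
```

ICP only converges to the correct alignment when the initial misalignment is small relative to the point spacing, as it is in this demo. This is why it is mostly used as a refinement step after a coarse pose estimate.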

■SegICP SegNet

SegICP

Combines segmentation with depth-image-based partial registration to obtain the object's pose

- Segmentation: SegNet

- Multi-hypothesis object pose registration: point-to-point ICP

- Accuracy: 1 cm position error and < 5° angle error

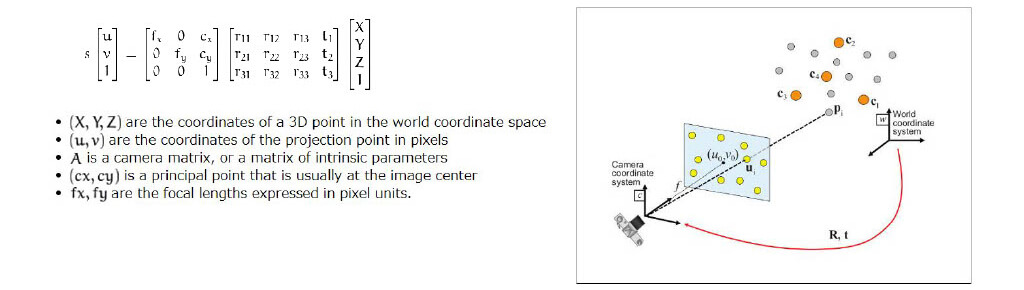

■Template-based method

Uses gradient information from an RGB-D or RGB image

Suited to objects without rich texture

The matched pose is refined with ICP

Arxiv:1905.06658

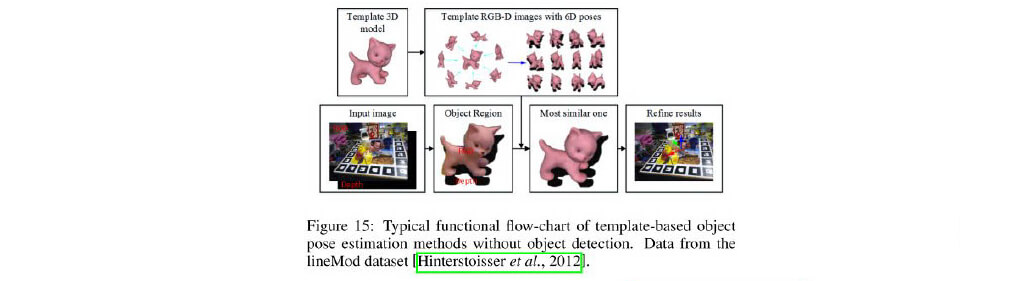

■Voting-based method

Suited to objects with occlusions

Dense class labeling

Arxiv:1905.06658

■Regression-based method

PoseCNN

- Localizing the center of objects

- Predicting its distance from camera

- Regressing rotation to a quaternion
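
The first two steps together determine the translation: under a pinhole camera model, the 2D object center and the predicted distance along the optical axis can be back-projected to a 3D position. The sketch below illustrates that relation only. The function name and the intrinsics (`fx`, `fy` for focal lengths, `px`, `py` for the principal point) are assumptions with made-up values.

```python
def translation_from_center(cx, cy, depth, fx, fy, px, py):
    """Back-project the 2D object center (cx, cy) at distance depth (= Tz).

    Inverts the pinhole projection cx = fx * Tx / Tz + px (and likewise for cy).
    """
    tx = (cx - px) * depth / fx
    ty = (cy - py) * depth / fy
    return (tx, ty, depth)

# Hypothetical values: center predicted at pixel (400, 200), 2 m from the camera.
T = translation_from_center(400.0, 200.0, 2.0, fx=800.0, fy=800.0, px=320.0, py=240.0)
print(T)
```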

PoseCNN architecture

- Feature extraction

- Embedding

- Classification and regression

Two loss functions for quaternion regression

- PoseLoss

- ShapeMatch-Loss
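
For reference, the two losses can be written as below. The notation is introduced here for illustration: $\mathcal{M}$ is the set of 3D model points, $m$ is the number of points, $R(\cdot)$ is the rotation matrix of a quaternion, $\tilde{q}$ is the estimated quaternion, and $q$ is the ground-truth quaternion.

$$
\mathrm{PLoss}(\tilde{q}, q) = \frac{1}{2m} \sum_{x \in \mathcal{M}} \left\lVert R(\tilde{q})\,x - R(q)\,x \right\rVert^{2}
$$

$$
\mathrm{SLoss}(\tilde{q}, q) = \frac{1}{2m} \sum_{x_1 \in \mathcal{M}} \min_{x_2 \in \mathcal{M}} \left\lVert R(\tilde{q})\,x_1 - R(q)\,x_2 \right\rVert^{2}
$$

PoseLoss compares each model point with itself under the two rotations, so it penalizes rotations that leave a symmetric object looking identical. ShapeMatch-Loss compares each point with its closest point instead, so such equivalent rotations are not penalized.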

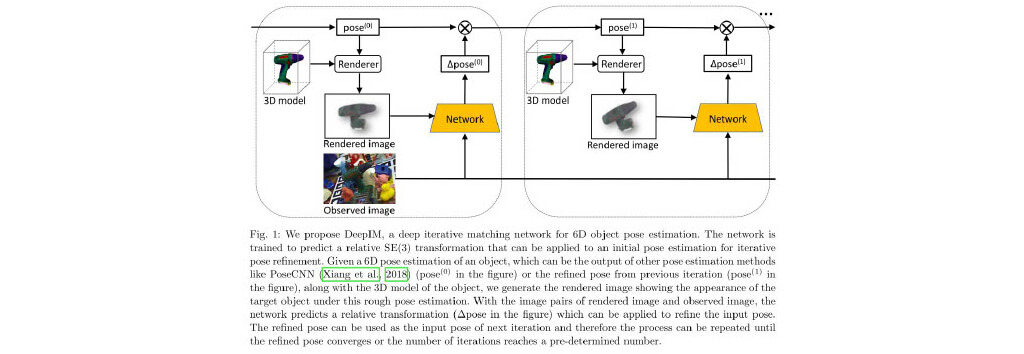

DeepIM

Iterative refinement using DNN

Can be combined with other pose estimators, which supply the initial pose

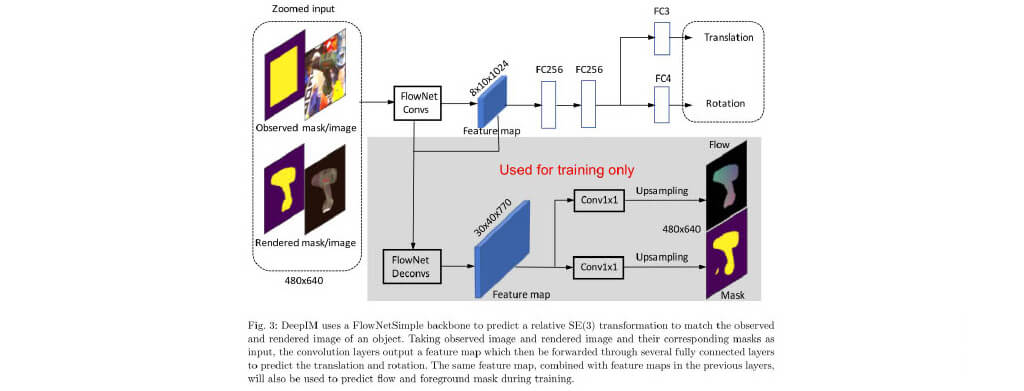

FlowNet: a network that estimates optical flow, used as the backbone of DeepIM

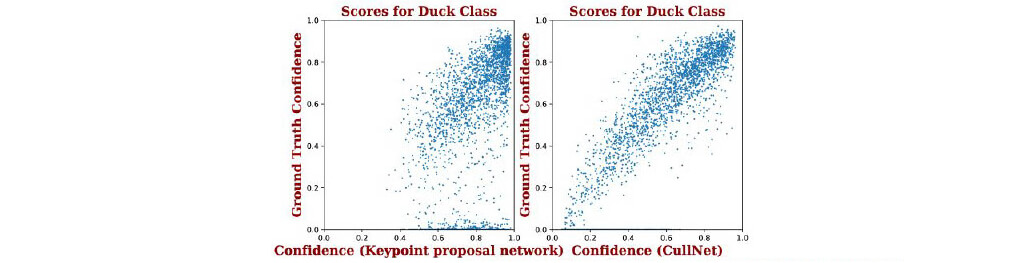

CullNet

Single-view, image-based object pose estimation

Culls false positives

Calibrates the confidence score

■Correspondence-based and Regression-based method

CullNet Architecture

Uses YOLOv3 to predict keypoints

CullNet outputs a confidence for each object pose obtained by PnP

■Voting-based and Regression-based method

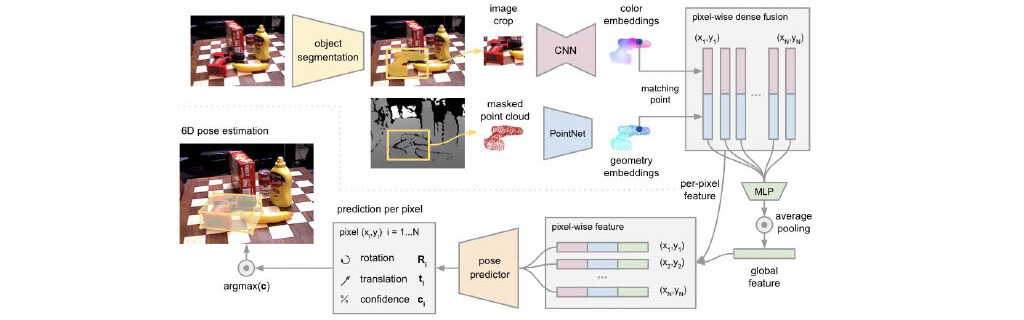

DenseFusion

Estimates the 6D pose of known objects

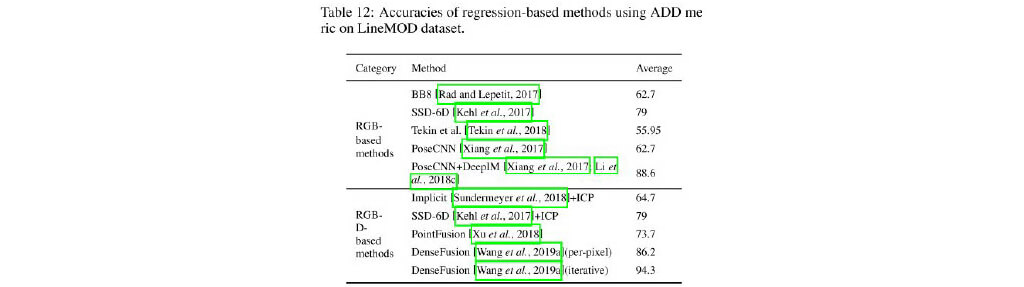

■Comparison

ADD (Average Distance of Model Points)

ADD-S (for symmetric objects)

A pose counts as correct when the error is smaller than a threshold (< 2 cm)
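
A small sketch of how the two metrics differ, assuming poses are given as a rotation matrix `R` and translation `t` applied to an array of model points. The function names and the four-point toy model are hypothetical. The estimated pose is rotated by 90° about an axis of symmetry, so the object looks the same although the point-to-point error is large.

```python
import numpy as np

def add_metric(model, R_gt, t_gt, R_est, t_est):
    """ADD: mean distance between corresponding model points under both poses."""
    gt = model @ R_gt.T + t_gt
    est = model @ R_est.T + t_est
    return np.linalg.norm(gt - est, axis=1).mean()

def add_s_metric(model, R_gt, t_gt, R_est, t_est):
    """ADD-S: mean distance from each ground-truth point to its closest estimated point."""
    gt = model @ R_gt.T + t_gt
    est = model @ R_est.T + t_est
    dists = np.linalg.norm(gt[:, None, :] - est[None, :, :], axis=2)
    return dists.min(axis=1).mean()

# Toy symmetric object: four points on a square, 5 cm from the center (units: meters).
model = 0.05 * np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0],
                         [-1.0, 0.0, 0.0], [0.0, -1.0, 0.0]])
R_gt, t_gt = np.eye(3), np.array([0.0, 0.0, 0.5])
# Estimated pose: same translation, rotated 90 degrees about the z axis.
R_est = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])

add = add_metric(model, R_gt, t_gt, R_est, t_gt)
add_s = add_s_metric(model, R_gt, t_gt, R_est, t_gt)
threshold = 0.02  # 2 cm
print(add < threshold, add_s < threshold)
```

Under ADD the estimate is rejected, while under ADD-S it is accepted, which is the reason ADD-S is reported for symmetric objects.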

From the tables, we can see that DenseFusion achieved the highest accuracy compared with the other regression-based methods. It uses a novel local feature fusion scheme that combines RGB and depth images.

ArithmerBlog
